Space Robotics

Astrobotic’s Space Robotics team is building the robotics technology of tomorrow. Our work ranges from surface and subsurface robotic platforms to precision landing and hazard detection systems, and our technology has already operated aboard the International Space Station.

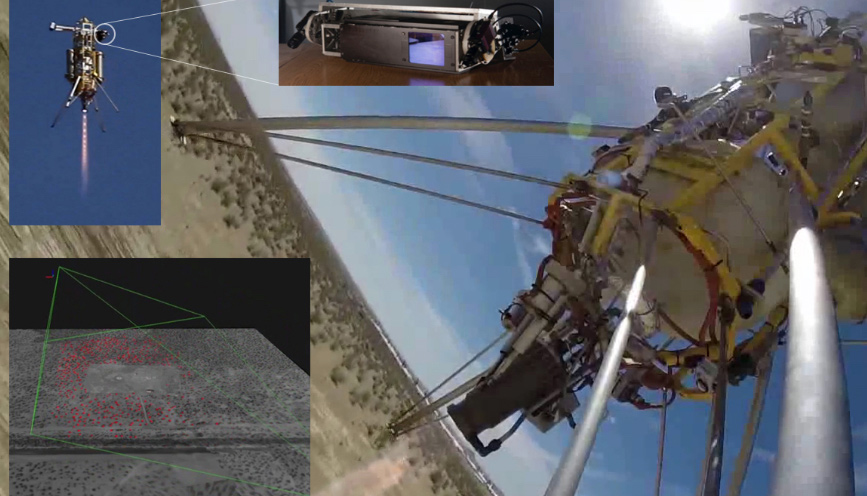

Terrain Relative Navigation, Exploration & Hazard Detection

Astrobotic is developing visual terrain relative navigation (TRN) and LiDAR-based hazard detection to enable precise and safe planetary landings on the Moon, Mars, and beyond. We were the first commercial company to use TRN and hazard detection to guide a suborbital launch vehicle to a safe landing site. We continue to refine these systems to minimize size, weight, power, and cost. Our TRN system won a NASA Tipping Point contract and will fly to the Moon on our Peregrine lander in 2022. Both our TRN and hazard detection systems will be used to land NASA’s VIPER rover at the south pole of the Moon on our Griffin lander.

In-Space Visual Navigation Hardware and Software

UltraNav is a compact smart camera for next-generation space missions. This low size, weight, and power (SWaP) system includes an integrated suite of hardware-accelerated computer vision algorithms that enable a wide range of in-space applications, including rendezvous and docking, autonomous rover navigation, and precision planetary landing. It supports capabilities such as TRN, visual simultaneous localization and mapping (SLAM), 3D reconstruction, object recognition and tracking, and feature targeting. UltraNav can be packaged as a standalone sensor or as part of a larger navigation system, customized with mission-specific algorithms, and integrated with a wide variety of spacecraft, from CubeSats to human landers.

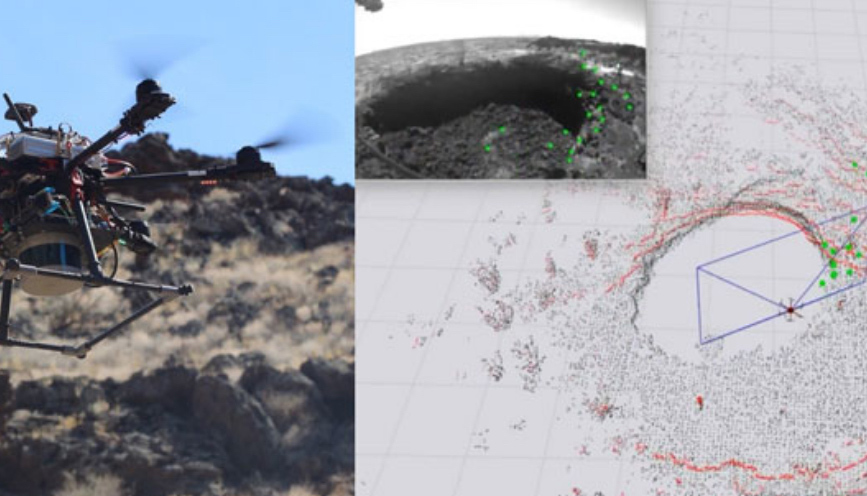

Visual-LiDAR-Inertial Navigation

Astrobotic is developing AstroNav, a software framework for multi-sensor robotic navigation and mapping. AstroNav will enable free-flying spacecraft to rapidly explore dark, unmapped, GPS-denied environments such as lunar skylights and icy moons. AstroNav has been extensively tested on Earth in caves, tunnels, and lava tubes. It is designed to address key challenges: accurate position determination, transitions between light and dark environments, and robust operation in flight scenarios. AstroNav uses factor-graph-based simultaneous localization and mapping (SLAM) with incremental smoothing to optimally fuse multiple types of sensors in real time.
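To make the idea of factor-graph fusion concrete, here is a minimal sketch in Python under strong simplifying assumptions: a toy 1D problem with linear Gaussian factors, consisting of a prior on the starting pose, odometry factors between consecutive poses, and one absolute LiDAR fix against a hypothetical wall at a known position. It solves the whole graph in a single batch weighted least-squares step. It does not implement incremental smoothing or nonlinear 3D poses, and `Factor` and `solve_factor_graph` are hypothetical names, not part of AstroNav.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    """Hypothetical linear 1D factor: sum(coeffs[k] * x[k]) should equal `measured`."""
    coeffs: dict[int, float]  # variable index -> coefficient
    measured: float
    sigma: float              # measurement standard deviation


def solve_factor_graph(num_vars: int, factors: list[Factor]) -> list[float]:
    """Batch weighted least squares over every factor (no incremental smoothing)."""
    # Accumulate the information matrix H = J^T W J and vector b = J^T W z.
    H = [[0.0] * num_vars for _ in range(num_vars)]
    b = [0.0] * num_vars
    for f in factors:
        w = 1.0 / f.sigma ** 2  # weight = inverse variance
        for i, ci in f.coeffs.items():
            b[i] += w * ci * f.measured
            for j, cj in f.coeffs.items():
                H[i][j] += w * ci * cj
    # Solve H x = b by Gaussian elimination; H is symmetric positive definite
    # when the graph is fully constrained, so no pivoting is needed.
    n = num_vars
    for col in range(n):
        for row in range(col + 1, n):
            ratio = H[row][col] / H[col][col]
            for k in range(col, n):
                H[row][k] -= ratio * H[col][k]
            b[row] -= ratio * b[col]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        x[row] = (b[row] - sum(H[row][k] * x[k] for k in range(row + 1, n))) / H[row][row]
    return x


# Toy graph over poses x0, x1, x2 (metres along a hypothetical tunnel).
factors = [
    Factor({0: 1.0}, 0.0, 0.01),          # prior: start at 0
    Factor({0: -1.0, 1: 1.0}, 1.0, 0.1),  # odometry: x1 - x0 = 1.0
    Factor({1: -1.0, 2: 1.0}, 1.0, 0.1),  # odometry: x2 - x1 = 1.0
    Factor({2: 1.0}, 2.2, 0.1),           # LiDAR fix against a known wall: x2 = 2.2
]
poses = solve_factor_graph(3, factors)
```

Because the odometry and the LiDAR fix disagree by 0.2 m, the solver spreads that disagreement across the equally weighted factors and places x2 at roughly 2.13 m instead of trusting either source alone. An incremental smoother such as iSAM2 aims to produce the same estimate while updating only the affected part of the solution as new factors arrive.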

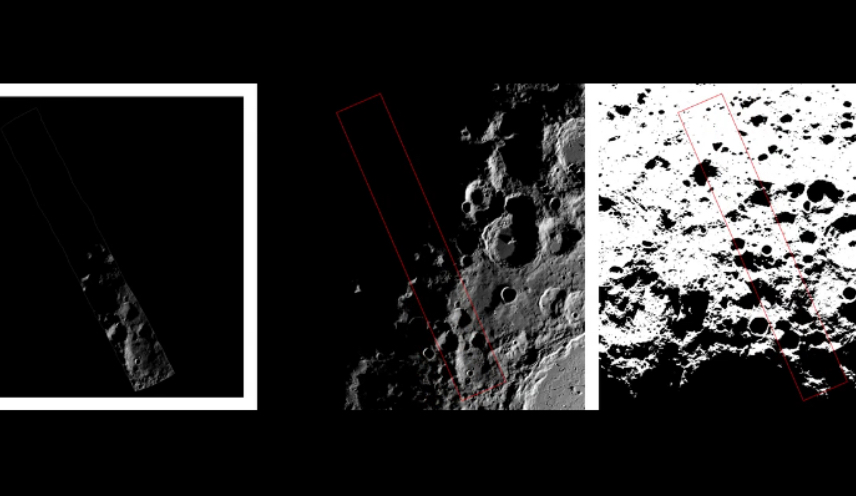

Simulation for Landing & Exploration

LunaRay is a physically accurate planetary renderer and a suite of software tools for planning precision landings and rover traverse paths. It uses topography and ephemeris data to produce photometrically accurate renderings of the lighting conditions on the lunar surface for any location and time. This is especially useful for polar missions, where long, sweeping shadows can cause the lighting conditions to change dramatically. LunaRay can also generate ground station line-of-sight and Earth elevation maps for telecommunications planning. LunaRay combines real-time, physics-based ray tracing with state-of-the-art photogrammetric methods that synthesize high-resolution digital elevation models (DEMs) from orbital images and LiDAR data.
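A terrain line-of-sight test is the basic building block of a visibility map. The sketch below shows a generic viewshed-style check, not a description of how LunaRay computes its maps. It assumes terrain heights sampled at uniform spacing along a straight profile from an observer to a target, applies the common d²/(2R) curvature approximation using the mean lunar radius (about 1737.4 km), and ignores refraction. The function `is_visible` and its parameters are hypothetical.

```python
import math

MOON_RADIUS_M = 1_737_400.0  # mean lunar radius in metres


def is_visible(heights_m, spacing_m, observer_mast_m=0.0, target_mast_m=0.0,
               body_radius_m=MOON_RADIUS_M):
    """Hypothetical check: is the last profile sample visible from the first?

    heights_m: terrain heights sampled at uniform spacing along the straight
    ground track from the observer (index 0) to the target (last index).
    Curvature is handled with the d^2 / (2R) drop approximation.
    """
    if len(heights_m) < 2:
        raise ValueError("need at least observer and target samples")
    eye = heights_m[0] + observer_mast_m

    def apparent_slope(i, extra=0.0):
        d = i * spacing_m
        drop = d * d / (2.0 * body_radius_m)  # curvature correction
        return (heights_m[i] + extra - drop - eye) / d

    target = len(heights_m) - 1
    target_slope = apparent_slope(target, target_mast_m)
    # Any intermediate sample that appears higher than the target blocks the view.
    return all(apparent_slope(i) <= target_slope for i in range(1, target))


print(is_visible([0, 5, 8, 10, 20], 100.0, observer_mast_m=2.0))
print(is_visible([0, 5, 40, 10, 20], 100.0, observer_mast_m=2.0))
```

In the first profile the terrain rises gently toward the target, so the target is visible; in the second, the 40 m ridge two samples out blocks it. Repeating such a test from a ground location toward a target over many cells of a DEM is one way to assemble a visibility map.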

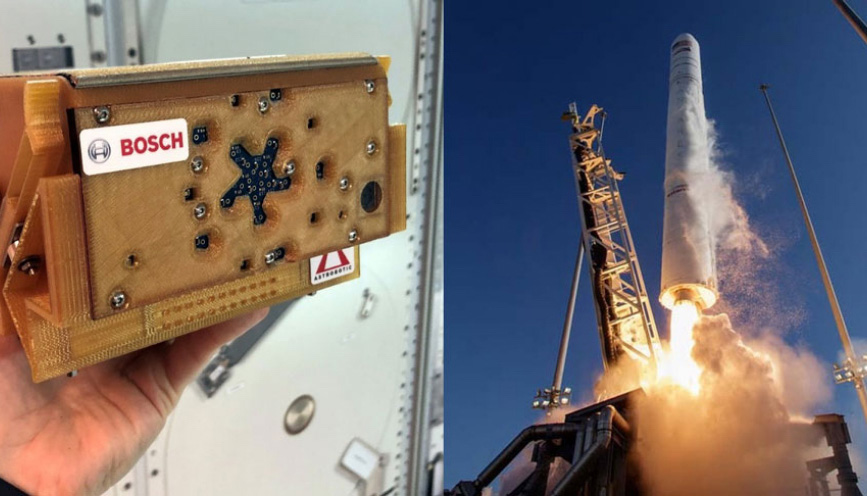

Space Station Acoustic Monitoring

Astrobotic and Bosch Research partnered to develop SoundSee. This instrument assisted crewmembers on the International Space Station (ISS) by flying through the station aboard NASA’s Astrobee robot to acoustically monitor ISS systems and predict when maintenance was needed. The program is part of a research collaboration between Astrobotic, NASA, and the ISS National Laboratory. SoundSee launched in 2019 and conducted its first experiments aboard the ISS in 2020.

Surface Autonomy and Multi-Agent Robotic Missions

Astrobotic develops custom designs, sensor systems, and rovers for planetary surface activities such as autonomous exploration, site preparation, and resource extraction. For example, we are developing co-localization technology for teams of rovers to enable faster exploration and greater productivity in future planetary missions. This technology consists of compact sensing hardware and novel software techniques for teams of autonomous vehicles to jointly estimate their positions relative to one another, navigate more precisely, and carry out mission goals more effectively.

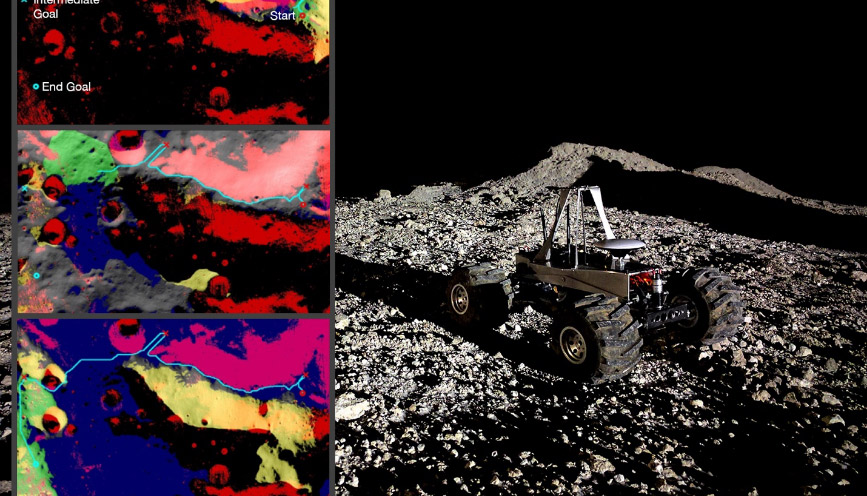

Mission Planning Software

Astrobotic has developed software for planning rover missions. The software provides a graphical user interface for mission engineers and scientists to interactively explore scenarios involving different landing sites, mission durations, and safety margins. Its robust and efficient route-planning optimization algorithms account for time-varying conditions, rover capabilities (such as climbing ability and energy requirements), risk specifications, and the sequencing of science objectives. The software can also be applied to spatiotemporal planning tasks on Earth in fields such as mining, agriculture, and aerial surveying.
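As an illustration of how rover capabilities can enter a route planner, here is a minimal grid-based sketch in Python using Dijkstra's algorithm. It assumes a height grid with uniform cell size, treats any move steeper than a hypothetical `max_slope` as impassable (a stand-in for climbing ability), and scores each move as distance plus a per-metre climbing penalty as a crude energy proxy. It ignores time-varying conditions, risk, and science sequencing, and `plan_route` is a hypothetical name, not Astrobotic's planner.

```python
import heapq
import math


def plan_route(heights, cell_m, start, goal, max_slope, climb_cost_per_m=10.0):
    """Hypothetical Dijkstra planner over a 4-connected height grid.

    Edge cost = distance travelled + penalty per metre climbed (energy proxy).
    Moves steeper than max_slope (rise/run) are skipped as impassable.
    Returns (total_cost, path), or (math.inf, []) if the goal is unreachable.
    """
    rows, cols = len(heights), len(heights[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk parent links back to the start to recover the path.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return cost, path[::-1]
        if cost > best.get(cell, math.inf):
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            rise = heights[nr][nc] - heights[r][c]
            if abs(rise) / cell_m > max_slope:
                continue  # too steep to traverse
            new_cost = cost + cell_m + climb_cost_per_m * max(0.0, rise)
            if new_cost < best.get((nr, nc), math.inf):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(queue, (new_cost, (nr, nc)))
    return math.inf, []


# A 9 m mound in the middle of a 10 m grid is too steep for max_slope = 0.5.
terrain = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]
cost, path = plan_route(terrain, 10.0, (0, 0), (2, 2), max_slope=0.5)
```

Here the planner detours around the mound along the flat edge of the grid for a cost of 40. Folding climbing limits into edge feasibility and energy into edge cost keeps the search itself a standard shortest-path problem; richer planners would add time as a search dimension to handle changing illumination.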

Plan a Mission

If you have an idea of your payloads, you’re close to being ready for launch. Join us on one of our upcoming missions to space.
